Full Speed Ahead: How Toyota Research Institute is Accelerating its Machine Learning Algorithms with Mapillary

Recent advances in deep learning are bringing autonomous driving technology closer to reality. However, deploying a novel deep learning algorithm in a real-world product is not a trivial undertaking. A practical deep learning module for autonomous vehicles must meet a very high standard of robustness and generalization, because the real world is the most diverse dataset one can expect. An algorithm that was prototyped and explored in a small data domain can be expected to degrade dramatically in performance, which also slows down the deployment process.

To limit the gap between our research and the real world, we leverage Mapillary Vistas, a large, publicly available street-level dataset, to benchmark our algorithms. Its diversity in both source images and annotations helps us keep a strong sense of domain scale in the loop of our algorithm design.

Here are some highlights of how we are using the Mapillary Vistas Dataset in our machine learning algorithm development, both in supervised learning and beyond.

Mapillary Vistas in supervised algorithms

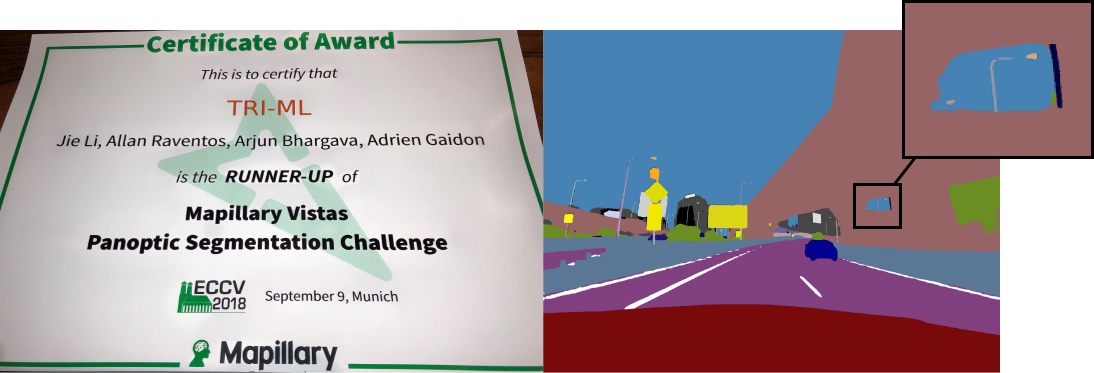

In the COCO and Mapillary Joint Recognition Challenge at CVPR last year, our team, TRI-ML, won the runner-up prize in the Mapillary Panoptic Segmentation track, with the best performance on instance recognition and segmentation. In this competition, we proved ourselves to be among the best in scene understanding on large-scale datasets.

In one of our most recent works on panoptic segmentation, TASCNet, we presented a unified network that not only achieves state-of-the-art performance but also doubles the model efficiency compared to previous works, making it a practical model for real-world integrated systems.

Mapillary Vistas beyond supervision

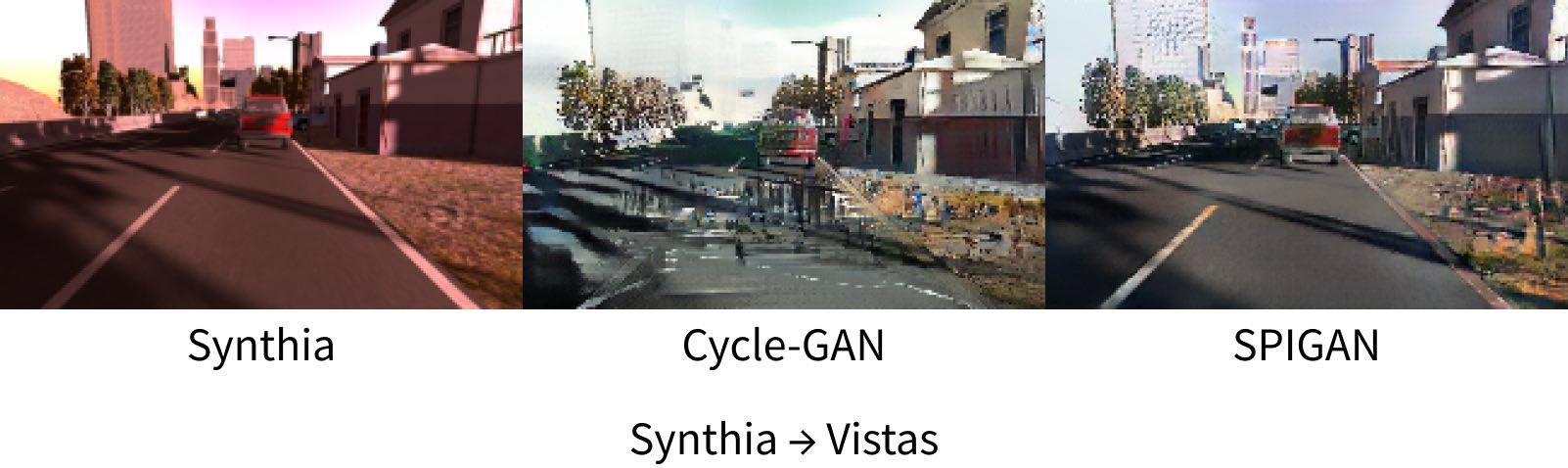

We are also using Mapillary Vistas in our algorithms beyond supervision. In our recent ICLR 2019 publication, “SPIGAN: Privileged Adversarial Learning from Simulation”, we presented an advanced domain adaptation algorithm for semantic segmentation tasks. In this work, the segmentation network is trained with image-label pairs from the simulation environment (source domain), without any annotations from the real-world dataset (target domain).

In our paper, we proposed using privileged information from the simulator (i.e., depth) in addition to the simulated images. We leveraged this privileged information as a geometric regularizer on the image transformation process, in which a generative adversarial network (GAN) translates source-domain images into the target domain. This regularization dramatically increases segmentation performance in the target domain. On the Mapillary Vistas dataset, the contribution of privileged information is more significant than on other datasets. When the target domain is more diverse, GANs tend to fail by generating new content in the source image that breaks the consistency between the image and its label, so a powerful regularizer is more important.
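To make the idea of a geometric regularizer more concrete, here is a minimal, simplified sketch in plain Python. It is not the implementation from the paper: the function names, the L1 distance, and the loss weights are assumptions chosen for illustration. The sketch assumes a depth predictor is run on both the simulated image and its GAN-translated version, and that both predictions are compared against the simulator's depth. If the GAN invents new content, the depth predicted from the translated image drifts away from the simulator depth and the penalty grows.

```python
from typing import List

# A depth map as H x W nested lists (hypothetical toy representation).
DepthMap = List[List[float]]


def l1_distance(a: DepthMap, b: DepthMap) -> float:
    """Mean absolute difference between two equally sized depth maps."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for value_a, value_b in zip(row_a, row_b):
            total += abs(value_a - value_b)
            count += 1
    return total / count


def privileged_loss(
    depth_from_source: DepthMap,
    depth_from_adapted: DepthMap,
    simulator_depth: DepthMap,
) -> float:
    """Penalize depth predictions that disagree with the simulator depth.

    Both the prediction on the simulated image and the prediction on its
    GAN-translated version are compared against the same ground truth.
    """
    return (
        l1_distance(depth_from_source, simulator_depth)
        + l1_distance(depth_from_adapted, simulator_depth)
    )


def total_loss(
    adversarial: float,
    segmentation: float,
    privileged: float,
    w_adv: float = 1.0,   # illustrative weights, not values from the paper
    w_seg: float = 1.0,
    w_priv: float = 1.0,
) -> float:
    """Weighted sum of the adversarial, segmentation, and privileged terms."""
    return w_adv * adversarial + w_seg * segmentation + w_priv * privileged


# Toy example: a 2 x 2 depth map from the simulator.
sim_depth = [[1.0, 2.0], [3.0, 4.0]]
# Depth predicted from a translated image in which new content appeared
# in one pixel, changing the apparent geometry there.
hallucinated_depth = [[1.0, 2.0], [3.0, 8.0]]

consistent_penalty = privileged_loss(sim_depth, sim_depth, sim_depth)
hallucinated_penalty = privileged_loss(sim_depth, hallucinated_depth, sim_depth)
print(consistent_penalty, hallucinated_penalty)
```

In this toy setup, a translation that preserves the scene geometry incurs no privileged penalty, while one that alters it is penalized in proportion to the depth error. In practice the predictors would be neural networks trained jointly with the GAN.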

At TRI, we look forward to developing more advanced scene understanding algorithms at scale and advancing Toyota’s automated driving capabilities with a vision of saving lives, expanding access to mobility, and making driving more fun and convenient.

/Jie Li, Research Scientist, Toyota Research Institute

The Mapillary Vistas Dataset is used to train perception models for autonomous driving. It is the world’s largest and most diverse street-level imagery dataset with pixel‑accurate and instance‑specific human annotations for understanding street scenes around the world.